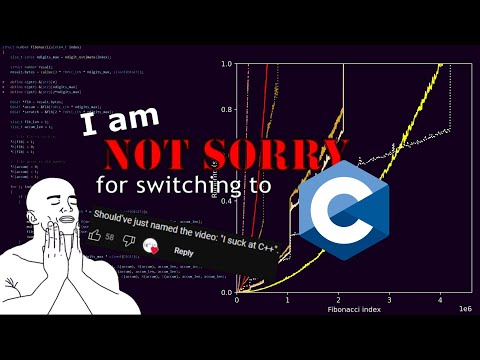

Sheafification of Fibsonicci, a response to the responses.

The goal of all of these algorithms is to (hopefully efficiently) compute

After cloning the repo, make sure to run

make initto initialise the output folders. This step is necessary before trying to build anything else.

To compute a particular Fibonacci number (using one of the supported implementations), use

# Generate binary

make bin/$(algo).hex.out

# Usage:

./bin/$(algo).hex.out $(fibonacci_index) $(output_file)

# If output_file is not provided, output is directed to stdoutDue to laziness, I have not implemented any intelligent conversion from hexadecimal to decimal.

If you don't jive with hex, you can convert the hex output with scripts/hex2dec.py.

./bin/$(algo).hex.out $(fibonacci_index) | python3 scripts/hex2dec.py $(output_file)

# If output_file is not provided, output is directed to stdoutBy default, hex2dec prints out at most 32 significant digits.

To print all digits, pass -n0 or --ndigits=0 as an argument.

The current algorithms have defaults that make the most sense on my hardware, but you can override some settings from make.

# CC: C compiler

# PY: Python interpreter

# DEFINES: C macro definitions

# (e.g., DIGIT_BIT: number of bits in a single "digit"

# of the large integer representation)

# FLAGS: C compiler flags

# AUTOHEADER_FLAGS: Flags passed to the header autogenerator

make CC=gcc-14 PY=python3.14 DEFINES="DEBUG DIGIT_BIT=$(bits)" FLAGS="-g" AUTOHEADER_FLAGS="--verbose" bin/$(whatever).outThere are, of course, other flags.

Warning

There is nothing scientific about how these algorithms are benchmarked. I promise no consistency between what you get, and what I present in videos.

To construct data for plotting, run

# To generate data for a specific algorithm

make data/$(algo).dat

# Or, to generate all data

make all-dataTo plot the data, a prerequisite is a configuration JSON file, having the following form.

{

"Name of algorithm (for legend)": {

"path": "data/$(algo).dat",

"colour": "$(some_matplotlib_colour)",

},

}Note

Paths to *.dat files are relative to the config JSON you define.

Tip

You can also plot against data files generated from the OG Fibsonicci project.

The anim script is able to parse either format.

You should then be able to run

python3 scripts/anim.py $(config).jsonIf you provide --output=foobar, then the plot animation will be saved to foobar.mp4, and the final plot to foobar.png.

Important

The anim script requires matplotlib, and ffmpeg (for saving .mp4s).

- The default compiler is

clang-18, because I like the taste of iron (and for other reasons), but this may not work on ARM64; see issue #1. The build should be fine withgcc, though.

The project includes the following implementations.

| Algorithm | Source (impl/) |

Runtime | Video |

|---|---|---|---|

| Naive | naive.c |

- | |

| "Linear" | linear.c |

[1] | |

| Fast exponentiation | fastexp{,2d}.c |

[2] | |

| Fast squaring | fastsquaring.c |

[2] | |

| NTT | ntt{,t}.c |

|

[3] |

Always need to have the nonrecursive "by definition" implementation present, for completeness:

def naive(n):

if n <= 1:

return n

return fib(n-1) + fib(n-2)The bottom-up iterative implementation, which involves

def linear(n):

a, b = 1, 0

for _ in range(n):

a, b = a+b, a

return bTip

Although the algorithm is called "linear" (referring to the number of iterations), the algorithm is

Based on the following identity:

we compute

Since all matrices involved are symmetric, we can represent these matrices as triples, with the following "multiplication":

The multiplication of integers is achieved with the simple

This "multiplication" is performed in three fused steps, as suggested by the expansion:

In the fast exponentiation algorithm, the

Now, the "multiplication" becomes

which we perform in three fused steps as well:

This is a "bottom-up" variant of fast exponentiation, which processes the bits of the index top-down. As a result, the transitions for set bits are much simpler (following only a single transition step of the Fibonacci sequence), and the main computation is iterative squaring:

We perform this squaring operation in two fused steps:

I've embarrassed myself enough with Karatsuba's multiplication algorithm before, so let's just fast-fourierward to an

The idea is pretty convoluted 😏.

We will represent our large integers in base

Suppose we are multiplying two large integers, written out as

Then, observe that their product

Note that these "digits" don't necessarily fit in the interval

Through a Fourier transform, we can convert these convolutions into digit-wise products in the frequency domain.

With

and similarly for

Normally, the idea is to embed the digits into the complex numbers, and then take